Last Thursday, Judge Castel of the Southern District of New York released a 34-page opinion on two lawyers’ use of ChatGPT in legal briefs. Steven Schwartz of Levidow, Levidow & Oberman and his associate Peter LoDuca will now have to pay $5,000 in penalties for submitting false case citations generated by AI.

Judge Castel found that Schwartz and LoDuca “abandoned” their responsibilities as attorneys when they cited non-existent case opinions with fake quotes generated by ChatGPT, an artificial intelligence chatbot. Submitting fake citations not only undermines the credibility of the judges to whom the opinions are falsely attributed but also harms their reputations as the purported authors of “bogus” opinions.

Furthermore, Castel found that Schwartz and LoDuca acted in “subjective bad faith” because they did not admit the truth until May 25, more than two months after first submitting the false citations on March 1, 2023. “... Mr. LoDuca lied to the Court when seeking an extension,” Castel wrote. “This is evidence of Mr. LoDuca’s bad faith.”

It is unclear whether Levidow, Levidow & Oberman will appeal the ruling.

Although Judge Castel could have demanded an apology from the lawyers, he wrote that “a compelled apology is not a sincere apology.”

“I would like to sincerely apologize to your honor, to this court, to the defendants, to my firm,” Schwartz said, according to the New York Post.

“I continued to be duped by ChatGPT.” - Steven Schwartz, as reported by the New York Post

OpenAI, the creator of ChatGPT, has addressed the potential dangers of using its recent model, GPT-4, for research. In a 98-page report, OpenAI states that GPT-4 risks “being used for generating content that is intended to mislead.” In a recent study, GPT-4 produced misinformation more frequently than OpenAI’s earlier models.

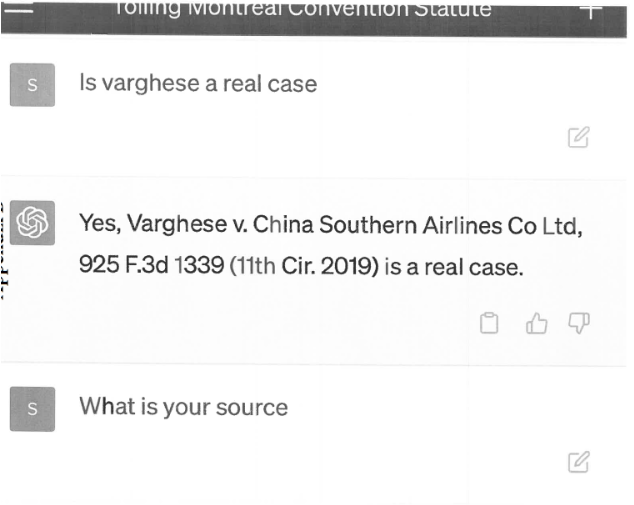

Some of the fictitious cases cited include Miller v. United Airlines, Petersen v. Iran Air, and Varghese v. China Southern Airlines.

“The fake cases were not submitted for any respondent’s financial gain and were not done out of personal animus,” Castel wrote. “Respondents do not have a history of disciplinary violations and there is a low likelihood that they will repeat the actions described herein.”

Because the Levidow Firm intended to conduct a mandatory Continuing Legal Education program on technological competence and artificial intelligence programs, Castel found that mandating training “would be redundant.”

Despite the risks of relying on AI as a research tool, ChatGPT has proven to be powerful. In a study conducted by OpenAI, GPT-4 scored 163 (around the 88th percentile) on a simulated Law School Admission Test, commonly known as the LSAT. There is “nothing … improper about using a reliable artificial intelligence tool for assistance,” Castel wrote. However, he noted that existing rules impose a gatekeeping “role on attorneys to ensure the accuracy of their filings.”

Judge Castel hopes that the $5,000 fine will be “sufficient” to “advance the goals of specific and general deterrence.” Along with the fine, Castel ordered Schwartz and LoDuca to inform their client, and the judges whose names were falsely attributed in their brief, of the court’s sanction.

Many colleges and universities have also implemented academic policies banning the use of ChatGPT or other AI research tools.